Sep 2025

Generative AI has already transformed how text, images, and video are created.

But a deeper shift is emerging—one that moves beyond producing isolated outputs toward generating entire environments that function, react, and evolve.

This new direction can be understood as Text-to-Living World:

AI that takes a line of natural language and uses it to construct not just scenes, but living, operational worlds with objects, logic, physical rules, and interaction patterns.

NVIDIA’s Omniverse platform and recent research in text-guided 3D environment generation illustrate that this concept is no longer abstract. It is starting to shape how companies design, test, operate, and deliver experiences.

How a Line of Text Can Define a World

The scope of generative AI is expanding rapidly.

Systems that once answered a request for an image or a short clip are beginning to interpret text as a blueprint for:

scenes → environments → experiences → worlds.

In this progression, text becomes a specification language that defines how a world should be structured and how it should behave.

A prompt such as

“Create a nighttime warehouse environment suitable for robotic navigation”

can be turned into a 3D world with lighting conditions, navigable surfaces, object placement, and physics simulation, ready for use in training or testing tasks.

This marks a shift from generating visual outputs to generating functional spaces that support interaction, exploration, and intelligent behavior.
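To make the idea of text as a specification language concrete, here is a minimal Python sketch. It is purely illustrative: `WorldSpec`, its fields, the keyword tables, and `parse_prompt` are hypothetical names, and a real system would use a language model rather than keyword matching.

```python
from dataclasses import dataclass, field


@dataclass
class WorldSpec:
    """Hypothetical structured description of a generated world."""
    setting: str = "generic"
    time_of_day: str = "day"
    light_intensity: float = 1.0  # relative scale, 1.0 = daylight (assumed)
    physics_enabled: bool = False
    tasks: list[str] = field(default_factory=list)


# Assumed keyword rules; a real pipeline would rely on a learned model.
SETTINGS = ("warehouse", "factory", "office")
TASKS = {"navigation": "navigation", "grasping": "manipulation"}


def parse_prompt(prompt: str) -> WorldSpec:
    """Map a natural-language prompt to a WorldSpec using simple keyword rules."""
    text = prompt.lower()
    spec = WorldSpec()
    for name in SETTINGS:
        if name in text:
            spec.setting = name
            break
    if "night" in text:
        spec.time_of_day = "night"
        spec.light_intensity = 0.1
    for keyword, task in TASKS.items():
        if keyword in text:
            spec.tasks.append(task)
    # In this toy rule set, any mention of robots switches physics on.
    spec.physics_enabled = "robot" in text
    return spec


spec = parse_prompt(
    "Create a nighttime warehouse environment suitable for robotic navigation"
)
print(spec)
```

The point of the sketch is the intermediate representation: once a prompt is reduced to explicit fields such as lighting, physics, and intended tasks, downstream tools can build and validate a world against them.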

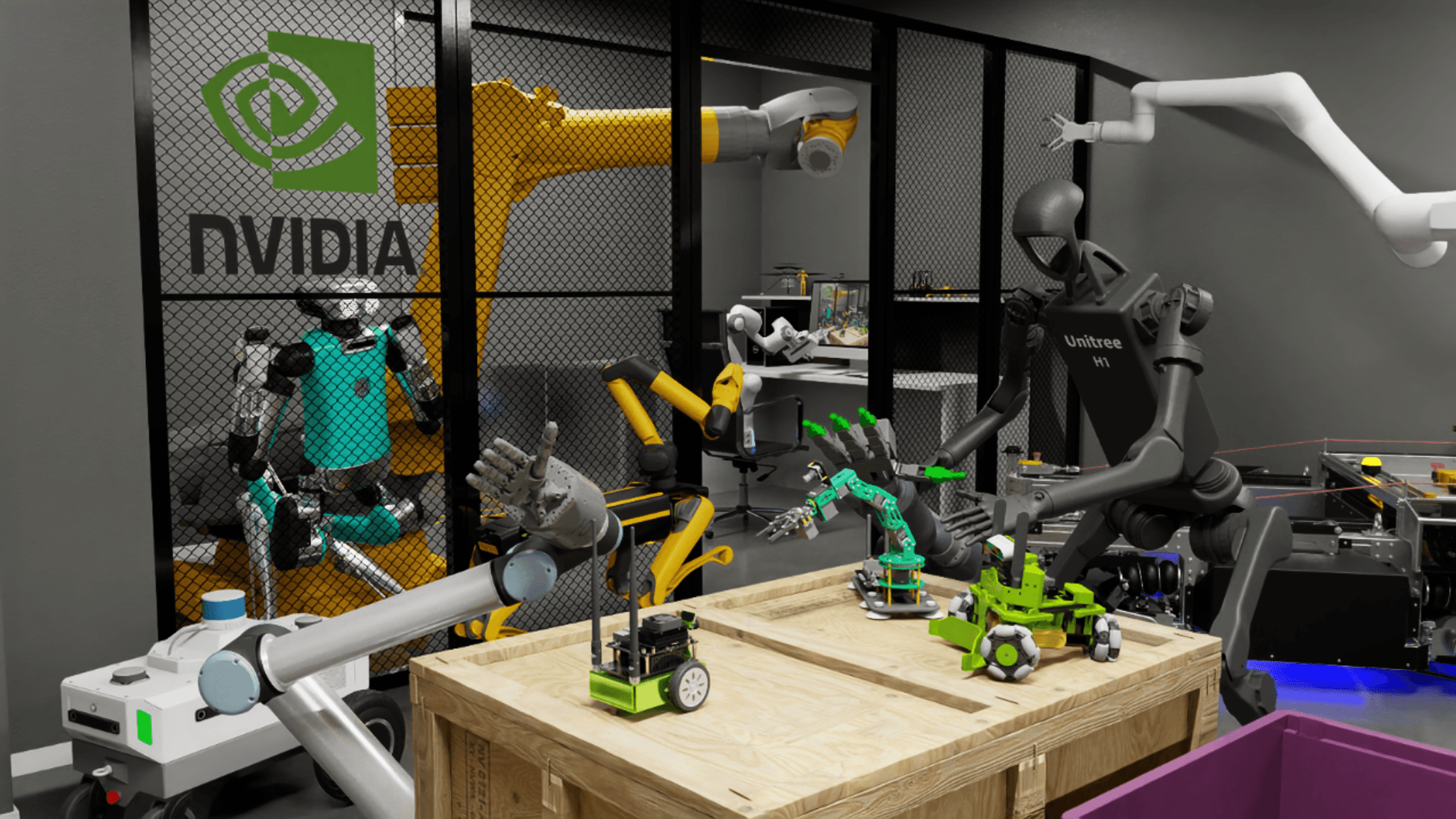

NVIDIA Omniverse and the Rise of World-Scale AI Simulation

Source: NVIDIA, https://docs.isaacsim.omniverse.nvidia.com/latest/index.html

NVIDIA Omniverse goes far beyond a 3D creation tool.

It is a simulation platform designed to build industrial-grade digital twins—virtual environments that mirror real-world physics and constraints with high fidelity.

Enterprises across manufacturing, logistics, construction, and robotics use Omniverse to reconstruct real environments and run AI or robots inside them as if they were operating in the physical world.

With tools such as Isaac Sim, Omniverse enables:

text-guided scene construction

procedural environment generation

realistic physics and material interaction

robotics behavior training in simulated worlds

This forms one of the clearest technological pillars of Text-to-Living World:

AI can acquire the environment it needs by generating the world first, before learning how to act within it.
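As a rough illustration of procedural environment generation, the Python sketch below lays out a toy warehouse floor from a seed. It does not use the Omniverse or Isaac Sim APIs; `generate_warehouse` and its parameters are hypothetical, and the 2D character grid stands in for a full 3D scene.

```python
import random

FREE, SHELF = ".", "#"


def generate_warehouse(width: int, height: int, shelf_prob: float, seed: int) -> list[str]:
    """Return a grid of rows; shelves may appear on even rows, odd rows stay as aisles."""
    rng = random.Random(seed)  # seeded so the same parameters reproduce the same world
    rows = []
    for y in range(height):
        cells = []
        for x in range(width):
            # Shelves are allowed only on even rows, away from the border columns.
            shelf_allowed = y % 2 == 0 and 0 < x < width - 1
            if shelf_allowed and rng.random() < shelf_prob:
                cells.append(SHELF)
            else:
                cells.append(FREE)
        rows.append("".join(cells))
    return rows


world = generate_warehouse(width=12, height=7, shelf_prob=0.7, seed=42)
print("\n".join(world))
```

Changing the seed yields a different but structurally similar layout, which is the property that makes procedural generation useful for producing many training environments from one description.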

Research Momentum Behind Text-Based 3D Environment Generation

Academia is moving in the same direction.

Studies on text-guided 3D world creation show how natural language can define not only visual layout but also functional, interactive properties.

One representative example is

“DreamCraft: Text-Guided Generation of Functional 3D Environments in Minecraft.”

This research shows how free-form text prompts can be turned into 3D structures in Minecraft that match the description while also satisfying functional constraints, such as rules about which block types may sit next to each other.

The emphasis is not on aesthetics alone, but on functional validity—environments that agents can navigate, use, and interact with.

This growing body of research provides the technical groundwork for Text-to-Living World, showing that AI can design spaces that operate according to meaningful rules and can be used for testing, gameplay, simulation, or training.
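Functional validity can be checked programmatically. The Python sketch below shows one simple, assumed criterion, not the method used in the cited research: it treats a generated layout as a 2D occupancy grid and uses breadth-first search to confirm that an agent can reach a goal cell from a start cell. The layouts, `is_navigable`, and the cell symbols are illustrative.

```python
from collections import deque


def is_navigable(grid: list[str], start: tuple[int, int], goal: tuple[int, int]) -> bool:
    """Breadth-first search over free cells; '#' marks an obstacle.

    Positions are (row, column) and movement is 4-connected.
    """
    rows, cols = len(grid), len(grid[0])
    if grid[start[0]][start[1]] == "#" or grid[goal[0]][goal[1]] == "#":
        return False
    seen = {start}
    queue = deque([start])
    while queue:
        r, c = queue.popleft()
        if (r, c) == goal:
            return True
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] != "#" and (nr, nc) not in seen:
                seen.add((nr, nc))
                queue.append((nr, nc))
    return False


open_layout = [
    "..#.",
    "..#.",
    "....",
]
blocked_layout = [
    "..#.",
    "..#.",
    "..#.",
]
print(is_navigable(open_layout, (0, 0), (0, 3)))     # True: a path exists via the bottom row
print(is_navigable(blocked_layout, (0, 0), (0, 3)))  # False: the wall spans every row
```

A generator could run a check like this on each candidate world and discard or repair those that fail, so that only environments an agent can actually use are kept.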

What This Means for Enterprise Innovation

Text-to-Living World technology is not confined to R&D labs.

Its implications stretch across product development, operations, customer experience, and digital transformation.

Companies can shift from building and testing in physical environments to experimenting in AI-generated worlds first.

These worlds can replicate complex scenarios, support rapid iteration, and reduce the cost and risk of real-world trials.

Brand experiences can evolve from static digital content to spatial, interactive experiences, where AI Personas act inside spaces that respond to users.

This opens the door to new business models:

virtual testing grounds, immersive commerce, AI-powered entertainment spaces, simulated training arenas, and more.

AI-generated worlds are poised to become a new layer of enterprise infrastructure.

From Persona to World-Scale Presence

Morphify has focused on building expressive, consistent, and highly controllable AI Personas.

Text-to-Living World technology introduces the next natural step in that evolution.

A Persona no longer has to exist only on a screen.

With world-generation capabilities, the Persona can occupy a dynamic environment that:

reacts to user behavior

supports movement and interaction

adapts to narrative or brand context

and evolves as the AI learns

Morphify Anima RT brings the behavioral and physical execution layer, while Text-to-Living World provides the stage, environment, and context in which that Persona can act.

Together, they outline a future where experiences are not simply viewed—they are lived, shaped, and navigated within AI-generated worlds.

References

NVIDIA. Omniverse Platform Overview and Isaac Sim technical documentation.

Earle, S., Kokkinos, F., Nie, Y., Togelius, J., Raileanu, R. “DreamCraft: Text-Guided Generation of Functional 3D Environments in Minecraft,” arXiv preprint arXiv:2404.15538 (2024). https://arxiv.org/abs/2404.15538