Oct 2025

AI is shifting from systems that simply respond to queries to systems that understand goals and execute tasks autonomously. This emerging class of AI, commonly described as AI Agents, moves beyond text generation toward a structure that integrates planning, tool use, action, and iterative refinement.

This evolution is not only a technical milestone but also a redefinition of how AI contributes to real operations, shaping both digital workflows and the emerging boundary between virtual and physical environments.

The Essence of an AI Agent: From Reaction to Execution

OpenAI’s documentation frames AI Agents as

“systems designed to plan and act in service of a user’s goal.”

In practice, this means an agent does far more than produce a single response.

It interprets intent, organizes the work required to achieve it, and completes tasks end to end.

The shift from reactive output to autonomous execution represents a fundamental change in how AI participates in enterprise processes.

Core capabilities include:

Interpreting complex goals beyond a single prompt

Structuring tasks into actionable sequences

Selecting and operating tools—APIs, browsers, services—to produce results

Maintaining context and adjusting plans based on intermediate outcomes

Together, these abilities enable agents to function not as chat interfaces, but as autonomous executors capable of completing meaningful work.
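The capabilities listed above can be sketched as a small loop. This is a minimal illustration under stated assumptions, not OpenAI's implementation: the `Agent` class, the `search` and `summarize` tools, and the fixed plan are all hypothetical stand-ins, and a real agent would typically ask a language model to produce and revise the plan.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical tools; a real agent would wrap APIs, browsers, or services.
def search(query: str) -> str:
    return f"3 results for '{query}'"

def summarize(text: str) -> str:
    return f"summary of [{text}]"

TOOLS: dict[str, Callable[[str], str]] = {"search": search, "summarize": summarize}

@dataclass
class Agent:
    goal: str
    context: list[str] = field(default_factory=list)  # outcomes of earlier steps

    def plan(self) -> list[tuple[str, str]]:
        # Stand-in for a model-generated plan: (tool name, argument) pairs.
        # "{last}" is a placeholder for the previous step's result.
        return [("search", self.goal), ("summarize", "{last}")]

    def run(self, max_steps: int = 5) -> str:
        steps = self.plan()
        while steps and len(self.context) < max_steps:
            tool_name, arg = steps.pop(0)
            last = self.context[-1] if self.context else ""
            result = TOOLS[tool_name](arg.replace("{last}", last))
            self.context.append(result)  # maintain context across steps
            # Adjust the plan based on the intermediate outcome:
            # if the search came back empty, retry with a broader query.
            if tool_name == "search" and result.startswith("0 results"):
                steps.insert(0, ("search", self.goal + " overview"))
        return self.context[-1] if self.context else ""

if __name__ == "__main__":
    print(Agent("AI agents").run())
```

The `max_steps` cap is a design choice worth keeping in any variant of this loop: an agent that revises its own plan needs a bound so that a failing tool cannot keep it running indefinitely.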

The Rise of Collaborative AI: Agents Working as a Team

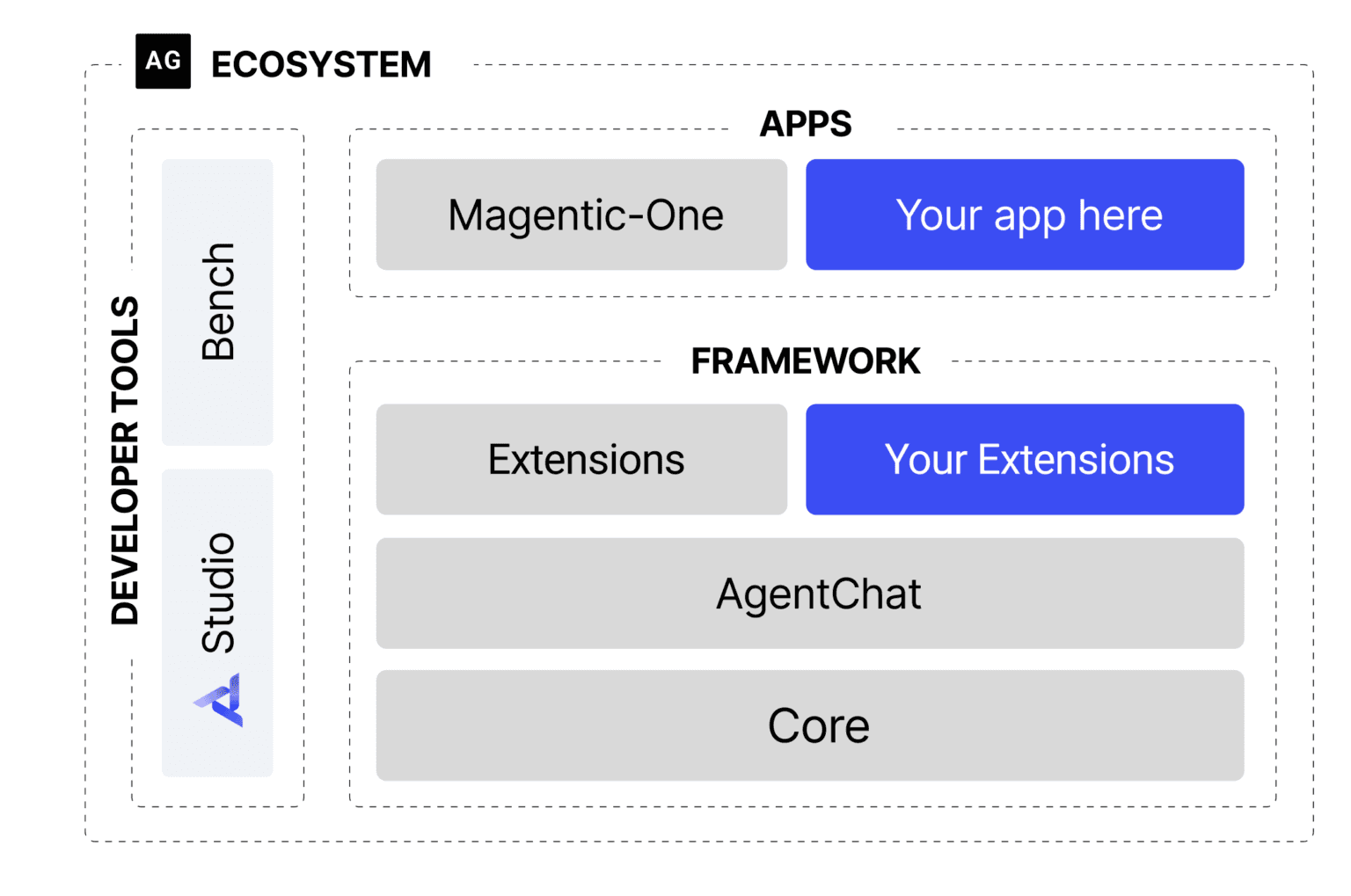

Source: Microsoft AutoGen, https://www.microsoft.com/en-us/research/project/autogen/

Microsoft’s AutoGen framework demonstrates how multiple agents can collaborate to solve complex problems.

This approach moves beyond single-model operation and into a structure that resembles a coordinated AI team.

Within a multi-agent system:

Agents divide roles such as research, analysis, and execution

Decisions evolve through dialogue and negotiation among agents

Human oversight can be introduced at critical points for safety or quality

This collaborative structure allows enterprises to automate tasks that previously required several human contributors, creating a more flexible and resilient automation layer.

In essence, AutoGen illustrates a future where automation is not driven by a single system, but by orchestrated intelligence across multiple specialized agents.
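To make the multi-agent structure concrete, here is a simplified sketch in plain Python. It deliberately does not use the AutoGen API; the function names (`researcher`, `analyst`, `executor`, `run_team`) and the `approve` callback are assumptions made for illustration. Each role is a stub where a real system would call a model, and the "dialogue" is reduced to a sequential hand-off over a shared message history.

```python
from typing import Callable

Message = dict[str, str]  # {"sender": ..., "content": ...}
AgentFn = Callable[[list[Message]], str]

# Hypothetical role agents; each reads the shared history and replies.
def researcher(history: list[Message]) -> str:
    return "findings: agents automate multi-step tasks"

def analyst(history: list[Message]) -> str:
    return "recommendation based on " + history[-1]["content"]

def executor(history: list[Message]) -> str:
    return "executed: " + history[-1]["content"]

def run_team(
    task: str,
    agents: list[tuple[str, AgentFn]],
    approve: Callable[[str], bool],
) -> list[Message]:
    history: list[Message] = [{"sender": "user", "content": task}]
    for name, agent in agents:
        # Human oversight at a critical point: a person must approve
        # the latest proposal before the execution role acts on it.
        if name == "executor" and not approve(history[-1]["content"]):
            history.append({"sender": "human", "content": "rejected"})
            break
        history.append({"sender": name, "content": agent(history)})
    return history

if __name__ == "__main__":
    team = [("researcher", researcher), ("analyst", analyst), ("executor", executor)]
    for message in run_team("evaluate agents", team, approve=lambda proposal: True):
        print(f"{message['sender']}: {message['content']}")
```

Passing `approve` as a callback keeps the oversight step separate from the agents themselves, so the same team can run fully automated in tests and with a real reviewer in production.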

Expanding into the Physical World

Although AI Agents today are most often applied in digital workflows—research, document creation, analysis, data transformation—their structure naturally extends into physical environments.

The same loop that governs digital execution—goal identification, planning, tool use, context management—maps cleanly onto robotics and embodied systems.

As a result, AI Agents are increasingly aligned with Embodied AI and Physical AI research.

In physical contexts, agents can:

Interpret sensory data from robots

Adjust plans in response to environmental changes

Select and execute movements or actions

Evaluate outcomes and refine the next step

This demonstrates a clear progression:

digital automation → physical execution → situational autonomy.

The line between digital and physical intelligence is becoming increasingly permeable.
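The physical loop described in this section (sense, plan, act, evaluate) can be illustrated with a toy simulation. This is a sketch under heavy assumptions: `SimulatedRobot` is a hypothetical one-dimensional robot, `choose_step` is a simple clamped controller, and the `disturbance` argument is an invented way to model environmental changes. It does not represent any real robotics stack.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class SimulatedRobot:
    """Hypothetical robot on a 1-D line; stands in for real sensors and motors."""
    position: int = 0

    def sense(self) -> int:
        return self.position

    def act(self, step: int) -> None:
        self.position += step

def choose_step(position: int, target: int, max_step: int = 2) -> int:
    # Plan the next action from the latest observation, clamped to max_step.
    error = target - position
    return max(-max_step, min(max_step, error))

def run_episode(
    robot: SimulatedRobot,
    target: int,
    max_iters: int = 20,
    disturbance: Optional[dict[int, int]] = None,
) -> list[int]:
    """Sense, plan, act, and re-evaluate until the target is reached."""
    trace: list[int] = []
    for i in range(max_iters):
        position = robot.sense()                   # interpret sensory data
        if position == target:                     # evaluate the outcome
            break
        robot.act(choose_step(position, target))   # select and execute an action
        if disturbance:                            # environment pushes the robot
            robot.position += disturbance.get(i, 0)
        trace.append(robot.position)
    return trace

if __name__ == "__main__":
    # The robot is knocked back 3 units during iteration 1 and recovers.
    print(run_episode(SimulatedRobot(), target=5, disturbance={1: -3}))
```

Because the plan is recomputed from a fresh observation on every iteration, the robot recovers from the disturbance without any special-case handling, which is the same property the digital agent loop relies on when a tool returns an unexpected result.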

Why Enterprises Are Adopting AI Agents

For enterprises, AI Agents represent more than incremental efficiency.

They enable a structural rethinking of operations.

Key impacts include:

Reduced operational load through task-level automation

Acceleration of complex knowledge workflows

Multi-agent structures that mirror human teams

Lower operating costs and faster turnaround

Consistent experiences across online and offline touchpoints

As organizations shift toward AI-driven operations, Agents provide the foundation for a unified intelligence layer that touches every channel and environment.

From Persona → Agent → Real-World Action

Morphify has built its foundation on high-quality AI Personas—entities capable of expressing personality, brand voice, and behavioral nuance.

When these Personas are combined with Agent capabilities, they evolve into systems that not only speak and understand, but also interpret goals, make decisions, and execute tasks.

With Morphify Anima RT, these capabilities extend even further, connecting digital agents to physical execution.

A single Persona can interact naturally with users, plan and execute tasks, and ultimately operate through robotics in real environments.

This positions Morphify within a broader progression of embodied behavior, world understanding, and autonomous action, aligning with the future direction of integrated AI experiences.

Looking Ahead: A Unified Framework for Actionable Intelligence

AI Agents are poised to play a central role in the next generation of AI systems.

Their ability to understand environments, choose actions, and refine strategies transforms AI from a tool into a goal-driven operational entity.

Morphify is building in this direction by connecting Persona-level interaction, Agent-level execution, and Physical AI capabilities into a single, cohesive continuum.

This marks the beginning of a paradigm where AI operates consistently across digital, virtual, and real-world settings—enabling organizations to build experiences and operations that were not previously possible.

References

OpenAI. “Agents,” OpenAI Platform Documentation.

Microsoft Research. “AutoGen Framework for Multi-Agent AI Systems,” Official GitHub Docs.