Oct 2025

AI has long been confined to digital environments—generating text, creating images, analyzing data, and executing tasks entirely within screens and interfaces.

However, the emergence of Physical AI marks a shift toward systems that can move, perceive, and act within the physical world.

Sources such as NVIDIA and Encord consistently characterize Physical AI as an evolution in which AI becomes capable of understanding real environments and performing physical actions. This transformation is made possible by combining Embodied AI, robotics, simulation, and behavior models into a unified intelligence that can operate in real-world settings.

What Physical AI Represents

Physical AI is more than robotic control; it is an integrated form of intelligence that can interpret physical environments, make decisions, and execute actions. NVIDIA describes Physical AI as a convergence of graphics, modeling, robotics, and behavioral AI into a single system.

Several elements define this new category of intelligence:

Simulation-based learning: training policies within accurate physics environments

Perception and interpretation: processing sensor and camera data from the real world

Planning and reasoning: choosing strategies to achieve physical goals

Actuation: performing movements and interactions through robots
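The perception, planning, and actuation elements above can be pictured as a single control loop. The following is a minimal, illustrative Python sketch, not NVIDIA's, Encord's, or Morphify's implementation. It assumes a hypothetical one-dimensional robot with a noisy position sensor that repeatedly perceives its state, plans a bounded step toward a goal, and actuates. The names `perceive`, `plan`, `act`, and `run_episode`, along with all numeric values, are assumptions chosen only for illustration.

```python
import random
from dataclasses import dataclass


@dataclass
class Observation:
    position: float  # noisy estimate of the robot's position (hypothetical units)


def perceive(true_position: float, noise: float, rng: random.Random) -> Observation:
    """Perception: turn a noisy sensor reading into an observation."""
    return Observation(position=true_position + rng.gauss(0.0, noise))


def plan(obs: Observation, goal: float, max_step: float) -> float:
    """Planning: choose a step toward the goal, limited to max_step."""
    error = goal - obs.position
    return max(-max_step, min(max_step, error))


def act(true_position: float, command: float) -> float:
    """Actuation: apply the command to the (simulated) body."""
    return true_position + command


def run_episode(goal: float = 5.0, noise: float = 0.05, steps: int = 50, seed: int = 0) -> float:
    """Run the perceive -> plan -> act loop and return the final true position."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    position = 0.0
    for _ in range(steps):
        obs = perceive(position, noise, rng)
        command = plan(obs, goal, max_step=0.5)
        position = act(position, command)
    return position


if __name__ == "__main__":
    print(run_episode())           # close to 5.0; sensor noise leaves a small residual error
    print(run_episode(noise=0.0))  # exactly 5.0 with a perfect sensor
```

Even in this toy, the robot never lands exactly on the goal when its sensor is noisy. This is a small-scale version of the unpredictability that Physical AI systems must tolerate.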

Encord emphasizes that Physical AI emerges when learning, reasoning, and physical interaction are fused—particularly through reinforcement learning, motion policies, and Sim2Real techniques.

In essence, Physical AI is about enabling digital intelligence to operate reliably in real environments, despite their unpredictability.

Why Simulation and Robotics Make Physical AI Possible

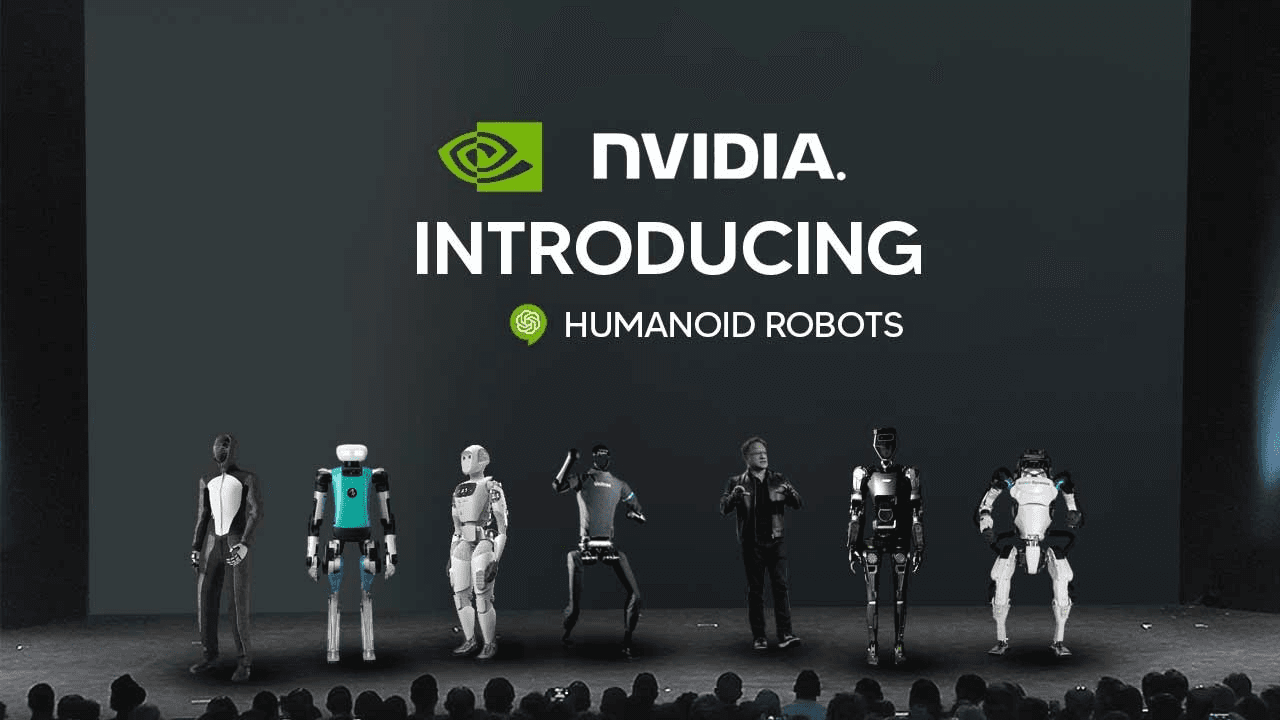

Source: NVIDIA, Jensen introduces robotic friends at GTC'24.

Real-world execution requires AI to handle ambiguity, noise, and dynamic conditions, challenges that purely digital systems rarely face to the same degree.

To address this, NVIDIA is integrating PhysX-based simulation, Omniverse tooling, and robotics policies into a unified training pipeline. In these environments, AI can run millions of iterations of behavioral learning across realistic physical simulations, practicing skills such as balancing, manipulating objects, avoiding obstacles, and interpreting complex environments.

Once trained, these behaviors transfer to real robots through Sim2Real techniques, which aim to let them adapt to changing physical conditions with minimal loss of performance. Encord highlights that this approach is essential because it allows AI to refine its behavior before it ever enters the real world.

Physical AI is therefore built on a tightly connected loop:

simulation → intelligence → real-world execution.
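To make this loop concrete, here is a toy Python sketch of training in simulation and then deploying to a "real" system. It uses domain randomization, one common Sim2Real approach. The sources above do not name domain randomization specifically, so treat the choice as an assumption. A hypothetical controller gain is selected by evaluating candidates across many simulated episodes with randomized friction. The winning gain is then run on a held-out friction value that stands in for the physical robot. The plant model, `simulate`, `train_in_simulation`, and every parameter range are illustrative assumptions. Pipelines built on PhysX or Omniverse would involve far richer physics and learned policies.

```python
import random
from statistics import mean


def simulate(gain: float, friction: float, goal: float = 1.0, steps: int = 30) -> float:
    """Toy 1D plant: each step moves by gain * error, reduced by friction.

    Returns the absolute distance from the goal after `steps` steps.
    """
    position = 0.0
    for _ in range(steps):
        command = gain * (goal - position)
        position += command * (1.0 - friction)
    return abs(goal - position)


def train_in_simulation(candidate_gains: list[float], episodes: int = 200, seed: int = 0) -> float:
    """Pick the gain with the lowest mean error over randomized simulated frictions."""
    rng = random.Random(seed)
    # Domain randomization (assumed technique): each simulated episode gets its own friction.
    frictions = [rng.uniform(0.1, 0.5) for _ in range(episodes)]
    return min(candidate_gains, key=lambda g: mean(simulate(g, f) for f in frictions))


# Simulation -> intelligence: search hypothetical gains 0.1 .. 3.0 entirely in simulation.
candidates = [round(0.1 * k, 1) for k in range(1, 31)]
best_gain = train_in_simulation(candidates)

# Intelligence -> real-world execution: a held-out friction value stands in for the robot.
real_error = simulate(best_gain, friction=0.3)
naive_error = simulate(0.1, friction=0.3)  # a small, untuned gain for comparison
print(best_gain, real_error, naive_error)
```

The gain is chosen to work across a whole range of simulated conditions rather than a single one. This is why it still performs well on a friction value it was never tuned for, which mirrors the idea of refining behavior before it reaches the real world.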

How Physical AI Is Reshaping Industries

Physical AI opens new possibilities in industries that rely on physical labor, real-time decisions, or precise interactions—manufacturing, logistics, retail, hospitality, and entertainment.

Its impact can be seen in several key shifts:

Replacing repetitive manual tasks with consistent automated execution

Enhancing safety and accuracy in hazardous or precision-dependent environments

Integrating decision-making into physical operations

Enabling robots to interact naturally with people

These changes signal that AI is no longer limited to digital augmentation. It is becoming an integral part of real-world operations, where intelligence and physical capabilities converge.

Extending Persona and Agent Intelligence Into the Physical World

Morphify has long focused on building high-fidelity AI Personas and Agent-driven execution systems—entities capable of understanding, responding, and expressing nuanced behavior. The rise of Physical AI expands these capabilities beyond digital interactions. Persona behavior, decision patterns, and conversational identity—traditionally confined to screens—can now extend into real environments through robotics.

Morphify Anima RT plays a central role in this transition. By linking Persona intelligence with real-world actuation, it enables a unified structure in which AI can understand context, plan actions, and perform them physically. As a result, a single AI entity can move fluidly from dialogue to interpretation, from strategic reasoning to real-world behavior. Morphify sees Physical AI not as a separate domain, but as the next natural layer that connects digital presence, agent execution, and embodied action into a coherent experience.

Looking Ahead: AI Becoming a Real-World Actor

Physical AI marks a turning point in how AI will exist and operate in the future.

As models evolve to interpret environments, choose actions, and adapt strategies in real time, AI becomes less of a tool and more of a participant in the world around us.

The ability to blend world understanding, physical action, and digital reasoning will increasingly define next-generation systems. Morphify is aligned with this direction—integrating Persona-level interaction, Agent-level execution, and Physical AI capabilities into a unified continuum. This integrated approach lays the foundation for AI to deliver consistent intelligence across digital, virtual, and real-world contexts, shaping a new paradigm in how experiences and operations are built.

References

NVIDIA. “NVIDIA Research Shapes Physical AI.”

Encord. “What Is Physical AI?”